Cloud Simulation - case study

Will the cloud technology replace local HPC installations for reservoir simulations?

An insight from early adopters of the technology into the pros and cons of a fast-evolving field.

Introduction

High Performance Computing (HPC) is now widely available on the web, through companies such as Amazon Web Services (AWS), Microsoft Azure and Google Compute Engine. Large scale computing resources can allow reservoir simulations to be performed at high resolution and using multiple realizations, for hydrocarbon recovery and carbon dioxide storage modelling. Cloud computing can offer a flexible alternative to in-house clusters, and a means for smaller groups to access large-scale computing resources.

When OpenGoSim (OGS) started, in 2015, we wanted to tackle large-scale studies, but we had no resources to purchase and maintain a big cluster, so cloud computing was the only way to go. This article discusses our experience with this technology, mainly on the AWS platform.

Benefits

The main attraction of using cloud computing is the ability to access large-scale compute clusters consisting of many CPU nodes and GPU accelerators, at short notice, without having the financial burden of owning, maintaining and upgrading such a system. The scale of cloud computing vendors means that a cluster of hundreds of cores can be obtained at reasonably short notice, avoiding the delays that can occur when sharing an in-house cluster.

A cloud solution has the advantage of facilitating cooperation. Once a model is in the cloud, reservoir engineers with access to the data can directly suggest input modifications to each other and share results. Negative aspects are the time and bother involved in the upload and download of data and results, and concerns about security and confidentiality.

Performance

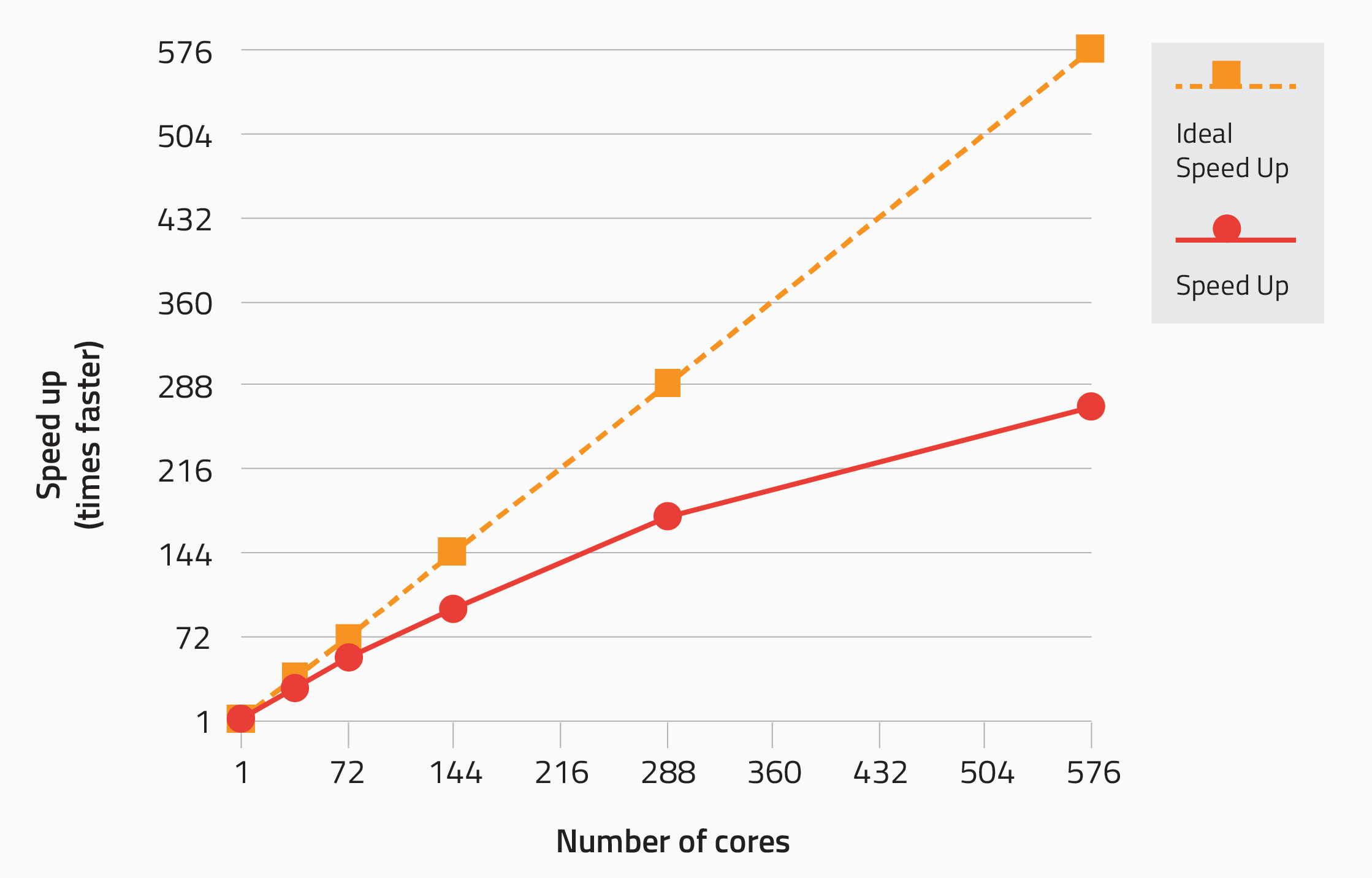

Cloud providers make available top-end CPUs connected by a fast network with a bandwidth of 100 Gbps [1], currently in the process of being upgraded to 200 Gbps [2]. We tested PFLOTRAN-OGS-1.3, a reservoir simulator parallelised by means of MPI [3], on an AWS virtual cluster that provides up to 576 cores. Each cluster node is powered by a 3.0 GHz Intel Xeon Platinum processor with 36 cores. A publicly available model of a potential UK CO2 storage site, named Bunter Closure 36 [4], was used for this test; its original grid was refined to obtain a study with about 9.7 million active cells.

As shown in the scalability plot, a speed-up of 270 is observed when using 576 cores, with each core processing about 16,760 cells. The 576-core simulation starts about 5-10 minutes after being submitted through the OGS Web Application, which functions as a cloud computing launcher [5].
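To put the scaling figures in context, the short sketch below derives the parallel efficiency and the per-core workload from the numbers quoted above. It assumes the speed-up is measured relative to a single-core run, which is not stated here, and it uses the rounded cell count of 9.7 million, so the cells-per-core value differs slightly from the 16,760 quoted. The function names are illustrative only.

```python
def parallel_efficiency(speedup, cores):
    """Fraction of ideal (linear) scaling achieved on `cores` cores."""
    return speedup / cores


def cells_per_core(active_cells, cores):
    """Average number of active grid cells handled by each core."""
    return active_cells / cores


# Figures quoted in the text; the cell count is the rounded value.
SPEEDUP = 270
CORES = 576
ACTIVE_CELLS = 9.7e6

efficiency = parallel_efficiency(SPEEDUP, CORES)
workload = cells_per_core(ACTIVE_CELLS, CORES)

print(f"Parallel efficiency: {efficiency:.1%}")
print(f"Cells per core:      {workload:,.0f}")
```

Under these assumptions the efficiency is just under 47%, that is, a little less than half of ideal linear scaling at 576 cores.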

Data upload and download times can be an issue, especially during model setup and initial testing. For the problem employed in the scaling tests above, for example, the sizes of the input and output datasets after compression are 270 MB and 1.7 GB respectively. With a relatively fast asymmetrical internet connection of 150 Mbps download and 25 Mbps upload, the input upload takes roughly 80 seconds, while the output download requires roughly 90 seconds.

When these times are compared with simulation times of hours, it is clear that the data transfer time is generally small relative to the runtime. However, the transfer does still hold up the workflow somewhat. It is also worth mentioning that the largest parts of the input, such as the grid and its properties, need to be uploaded only once for multiple runs, and that tools exist to perform the compression and decompression automatically when a file is selected for transfer to or from the cloud.
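The transfer times quoted above can be checked with a simple estimate: file size in bits divided by link speed. The sketch below assumes decimal units (1 GB = 1000 MB, 1 MB = 8 megabits) and ignores protocol overhead and latency, so it gives only an idealised figure. The function name is illustrative.

```python
def transfer_seconds(size_megabytes, link_mbps):
    """Idealised transfer time: size in megabits divided by link speed in Mbps."""
    if link_mbps <= 0:
        raise ValueError("link speed must be positive")
    return size_megabytes * 8 / link_mbps


# Dataset sizes and link speeds quoted in the text.
INPUT_MB = 270       # compressed input
OUTPUT_MB = 1700     # compressed output, 1.7 GB taken as 1700 MB
UPLOAD_MBPS = 25
DOWNLOAD_MBPS = 150

upload_time = transfer_seconds(INPUT_MB, UPLOAD_MBPS)
download_time = transfer_seconds(OUTPUT_MB, DOWNLOAD_MBPS)

print(f"Input upload:    {upload_time:.0f} s")
print(f"Output download: {download_time:.0f} s")
```

The estimate gives about 86 seconds for the upload and about 91 seconds for the download, in line with the figures of roughly 80 and 90 seconds given above.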

Pricing

AWS offers a price of 0.12 USD per core-hour on a top-end CPU node connected via a 100 Gbps network. A cheaper rate is commonly available on a spot basis, but the user is then vulnerable to being out-bid at any time. Commercial resellers of software that provides an easy-to-use virtual cluster may charge between 0.15 and 0.25 USD per core-hour, depending on contract, usage, etc.

These costs may be compared with the in-house option. The raw cost of a core-hour, including equipment and electricity, varies between 0.03 and 0.05 USD, depending on regional power and installation costs. On top of this, one must account for the cost of hosting the server and of the staff needed to maintain the system and network. Together, these two costs add another 0.05 USD per core-hour [6].

This brings the total cost of a core-hour to about 0.1 USD, assuming a typical 80-85% usage. Not surprisingly, an in-house cluster can still be cheaper if it is well used. The cloud solution may still offer advantages in terms of capacity and flexibility.
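The cost comparison can be sketched numerically. The example below rests on one possible reading of the figures, which is an assumption and not something stated above: that the raw and hosting/staff costs apply to every core-hour the cluster exists, so idle time must be spread over the hours actually used. The function names are illustrative.

```python
def effective_cost(raw_cost, overhead_cost, utilisation):
    """In-house cost per *used* core-hour (USD), spreading idle time over used hours."""
    if not 0 < utilisation <= 1:
        raise ValueError("utilisation must be in (0, 1]")
    return (raw_cost + overhead_cost) / utilisation


def break_even_utilisation(raw_cost, overhead_cost, cloud_price):
    """Utilisation at which the in-house cost equals the cloud price."""
    return (raw_cost + overhead_cost) / cloud_price


# Figures quoted in the text, in USD per core-hour.
OVERHEAD = 0.05      # hosting and staff
CLOUD_PRICE = 0.12   # AWS on-demand price

for raw in (0.03, 0.05):
    for utilisation in (0.80, 0.85):
        cost = effective_cost(raw, OVERHEAD, utilisation)
        print(f"raw={raw:.2f} utilisation={utilisation:.0%} -> {cost:.3f} USD")
    threshold = break_even_utilisation(raw, OVERHEAD, CLOUD_PRICE)
    print(f"raw={raw:.2f} break-even utilisation: {threshold:.0%}")
```

Under this reading the in-house cost falls between roughly 0.09 and 0.125 USD per used core-hour, consistent with the figure of about 0.1 USD, and the in-house cluster stops being cheaper than the 0.12 USD cloud price once its usage drops below roughly 67-83%.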

Security

Security is a concern for many reservoir engineers, who fear that sensitive data can be exposed when stored on an online server. Cloud platforms use sophisticated technologies similar to those employed by online banking to prevent data leakage and hacker attacks. Data is encrypted both in transit and at rest, login systems are made resistant to attacks via multi-factor authentication, and penetration tests are carried out on a regular basis to identify and fix any potential faults and vulnerabilities. While a small risk remains, the security policies deployed by cloud providers and their resellers do minimise the possibility of security incidents.

Other aspects of cloud computing

Currently, results are generally downloaded for post-processing. Streaming technologies offer the possibility of also doing post-processing of large reservoir models in the cloud. This would have the advantage of reducing download delays.

Conclusion

Cloud computing is a technology that has not yet fully penetrated the reservoir engineering simulation market. Current technology seems mature enough to carry out computations previously feasible only on a large local cluster. The firepower available for high-resolution modelling and multiple realizations is a big attraction. For groups unable to maintain a large in-house computing resource, cloud computing offers a way forward. For larger groups with their own computing departments, the cost argument and possibly security concerns may favour staying in-house. However, even then, cloud computing can still provide top-up resources and access to state-of-the-art computing capability when required. The ideal solution may be a mix, in which the engineer has a simple and non-intrusive way of selecting local or cloud platforms.

References

[1] https://aws.amazon.com/hpc/efa/

[3] https://opengosim.com/pflotran-ogs-releases.php

[4] https://www.eti.co.uk/programmes/carbon-capture-storage/strategic-uk-ccs-storage-appraisal

[5] https://opengosim.com/ogs-web-app.php

[6] https://resources.rescale.com/the-real-cost-of-high-performance-computing/